The term "feature engineering" refers to the processes applied to the features of a dataset to make them more suitable for modeling. Feature engineering is difficult because it can be extremely time-consuming and requires an in-depth understanding of the features one is working with. Modeling and learning algorithms depend heavily on how the features are represented, so it is essential to align the features with the way the algorithms operate. In short, feature engineering changes the representation of features so that the maximum amount of information can be extracted from them.

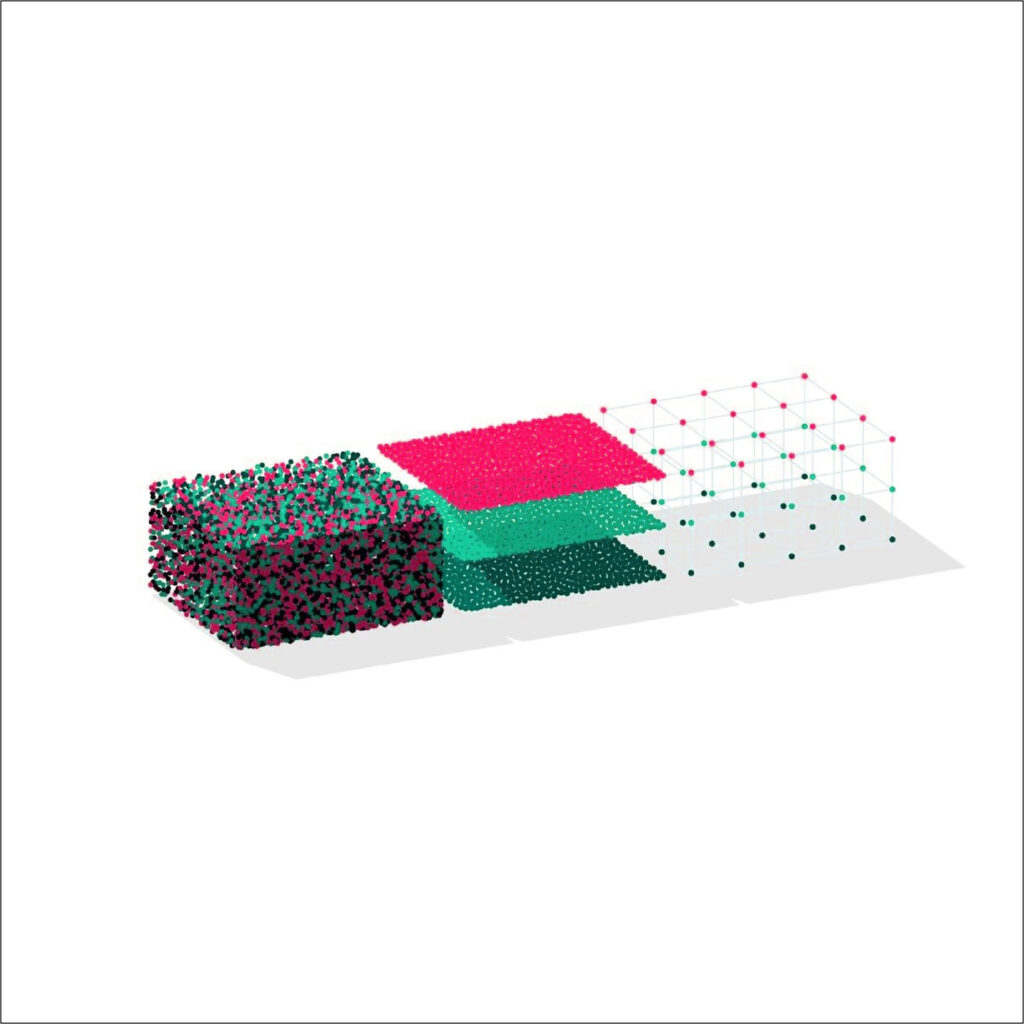

Keep in mind that the terms data mining, data exploration, data cleansing, feature engineering, and data preparation can be confusing, because the tasks carried out under each may overlap. As a result, some practitioners may not treat feature selection or feature scaling as part of feature engineering. In this section, however, feature transformation, feature construction, feature scaling, and feature reduction are all considered part of feature engineering.

Feature Scaling methods bring the features onto the same scale. Scaling is especially important for algorithms that rely on a distance metric. Feature Transformation, by contrast, is used when a feature cannot be used directly because its relationship with the target is nonlinear or its distribution is skewed, so some transformation is required first.

Feature Construction deals with deriving new features from existing ones in order to improve the predictive power of the algorithms.

Feature Reduction can be described as the reverse of Feature Construction: it decreases the number of features using various methods. Reducing the feature count matters because some algorithms require it and because too many features can hurt the model's performance, which is why feature selection methods are employed.

The four aspects that make up feature engineering are presented below.

Feature transformation replaces a feature's observations with a function of those observations. It is usually done to change the relationship between an independent feature and the dependent variable, most commonly to turn a non-linear relationship into a linear one. Transformations are also applied when the data is skewed and the distribution needs to be brought closer to normal. Common transformations include the logarithm, square root, cube root, and arcsine square root.
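As a rough illustration of how such transformations reduce skew, the sketch below applies a log and a square root transform to a small right-skewed feature and compares skewness before and after. The `incomes` values and the `skewness` helper are made up for this example, and `log1p` (log of 1 + x) is an assumed choice that stays defined at zero.

```python
import math

def skewness(values):
    """Population skewness: the third standardized moment."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    third = sum((v - mean) ** 3 for v in values) / n
    return third / var ** 1.5

# Hypothetical right-skewed feature; the numbers are invented for illustration.
incomes = [20, 22, 25, 27, 30, 35, 40, 60, 120, 400]

# Replace each observation with a function of that observation.
log_incomes = [math.log1p(v) for v in incomes]   # log(1 + x)
sqrt_incomes = [math.sqrt(v) for v in incomes]

print("raw  skewness:", round(skewness(incomes), 2))
print("sqrt skewness:", round(skewness(sqrt_incomes), 2))
print("log  skewness:", round(skewness(log_incomes), 2))
```

On this toy data both transforms pull the long right tail in, with the logarithm doing so more strongly than the square root. Which transform suits a real feature depends on its distribution and on whether it contains zeros or negative values.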

There are a variety of methods and motives for constructing features. Encoding techniques build new numerical features from existing categorical features, which makes them usable in algorithms that cannot handle categorical inputs directly. Derived variables, on the other hand, combine several existing features into new features that can carry more information. Binning is another form of feature construction, in which new categorical features are built by grouping the values of existing numerical features into ranges.
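The three ideas above (encoding, derived variables, and binning) can be sketched in a few lines of plain Python. Everything here is hypothetical: the records, the column names, the `one_hot`, `bmi`, and `age_band` helpers, and the age cut points are chosen only for illustration. One-hot encoding is used as one example of an encoding technique among several.

```python
# Hypothetical records; column names and values are made up for illustration.
rows = [
    {"city": "Paris", "height_m": 1.80, "weight_kg": 81.0, "age": 23},
    {"city": "London", "height_m": 1.60, "weight_kg": 64.0, "age": 45},
    {"city": "Paris", "height_m": 1.75, "weight_kg": 70.0, "age": 67},
]

def one_hot(values, prefix):
    """Encoding: one 0/1 indicator column per category."""
    categories = sorted(set(values))
    return [{f"{prefix}_{c}": int(v == c) for c in categories} for v in values]

def bmi(weight_kg, height_m):
    """Derived variable: combine two features into a single new one."""
    return weight_kg / height_m ** 2

def age_band(age):
    """Binning: map a numeric age to a categorical band (arbitrary cut points)."""
    if age < 30:
        return "young"
    if age < 60:
        return "middle"
    return "senior"

city_flags = one_hot([r["city"] for r in rows], prefix="city")
engineered = [
    {**flags, "bmi": bmi(r["weight_kg"], r["height_m"]), "age_band": age_band(r["age"])}
    for r, flags in zip(rows, city_flags)
]

for record in engineered:
    print(record)
```

In practice a data-frame library would usually handle these steps, but the plain version makes it clear that each technique simply maps existing columns to new ones.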

When features need to be on the same scale, they are rescaled; this is known as feature scaling. In many algorithms, feature scaling prevents the model from giving more weight to particular features simply because their values are larger. It is vital for algorithms such as K-means, which rely on distance metrics like Euclidean distance. Features also have to be scaled for optimization techniques such as Gradient Descent and feature extraction techniques such as Principal Component Analysis to work properly. One common scaling technique is normalization (min-max scaling), in which feature values are rescaled to lie between 0 and 1 or between -1 and 1. Another important method is Z-score normalization (standardization), which rescales a feature to have zero mean and unit standard deviation.
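A minimal sketch of the two scaling methods follows, using only the standard library. The function names `min_max_scale` and `z_score` and the sample `ages` values are assumptions made for this example. Both functions assume the feature is not constant, since a constant feature would cause a division by zero.

```python
import statistics

def min_max_scale(values, low=0.0, high=1.0):
    """Normalization: rescale values linearly into the range [low, high]."""
    lo, hi = min(values), max(values)
    span = hi - lo  # assumed non-zero (feature is not constant)
    return [low + (v - lo) * (high - low) / span for v in values]

def z_score(values):
    """Z-score normalization: subtract the mean, divide by the standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation, assumed non-zero
    return [(v - mean) / std for v in values]

# Hypothetical feature; the numbers are invented for illustration.
ages = [18, 25, 32, 47, 60]

print(min_max_scale(ages))             # values between 0 and 1
print(min_max_scale(ages, -1.0, 1.0))  # values between -1 and 1
print(z_score(ages))                   # mean 0, standard deviation 1
```

When scaling is part of a modeling pipeline, the minimum, maximum, mean, and standard deviation are normally computed on the training data only and then reused for new data.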

Having too many features, particularly correlated ones, contributes to one of the most difficult problems in data modeling: overfitting. Multicollinearity arises when features are correlated with one another, and a variety of methods have been developed to identify such features and eliminate them. The two main topics under feature reduction are feature selection and feature extraction. Feature selection methods simply pick an appropriate, non-redundant subset of features to limit overfitting. Feature extraction, by contrast, does not take an "in or out" approach and instead focuses on extracting the maximum information from all features. Unlike feature selection, it does not eliminate features; it changes the feature space to find uncorrelated components. Other techniques, such as Factor Analysis, can also be used to reduce the number of features.
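As one simple example of feature selection, the sketch below drops any feature that is highly correlated with a feature already kept. This greedy correlation filter is only one of many possible selection methods. The `pearson` and `drop_correlated` helpers, the 0.9 threshold, and the sample data are all assumptions made for illustration. Feature extraction methods such as Principal Component Analysis are not shown here.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length columns."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def drop_correlated(features, threshold=0.9):
    """Keep a feature only if its |correlation| with every kept feature is below threshold."""
    kept = []
    for name, column in features.items():
        if all(abs(pearson(column, features[k])) < threshold for k in kept):
            kept.append(name)
    return kept

# Hypothetical data: height_in is (almost exactly) height_cm in other units.
features = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age": [41, 23, 35, 52, 29],
}

print(drop_correlated(features))
```

Here `height_in` is removed because it duplicates the information in `height_cm`, while `age` is kept. The result depends on the order in which features are visited, so a real workflow would usually also consider each feature's relationship with the target.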