The Unsupervised Learning setup does not require the algorithm to predict anything specific. Instead, the algorithm detects patterns in the data, and the user is free to interpret them and draw conclusions.

Unlike Supervised Learning, the data does not have a dependent variable with class labels. This setup presents the biggest problem. Without class labels, it isn’t easy to assess the performance of an algorithm. Unsupervised learning algorithms present the greatest challenge to the user. The user must determine if the output is useful and provide any insights. This is in contrast to Supervised Learning algorithms. There are no quantitative ways to evaluate the model’s performance.

The most common tasks in Unsupervised Learning are clustering, dimensionality reduction, and anomaly detection (density estimate).

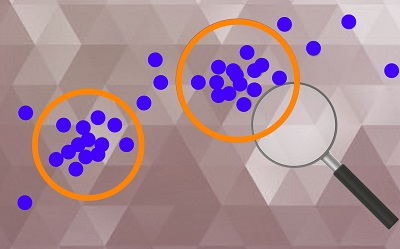

Clustering is the most common problem that can be solved in an Unsupervised Learning system. This is where algorithms are used to identify patterns in data and create clusters of data points similar in some way to one another. K-means and Hierarchical clustering are the most popular methods of clustering.

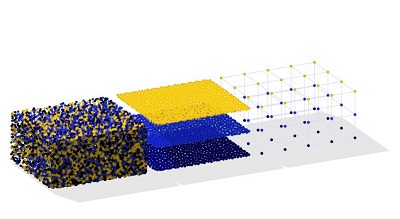

Multicollinearity is the root problem in data modeling. Overfitting is the result. Many algorithms that work in an unsupervised learning environment can help reduce the data’s dimensions. Principal Component Analysis is one of them. Factor Analysis is another.

Many density estimation methods and others that work in an unsupervised learning environment are used to detect anomalies. These are then treated as outliers which help in cleaning up the data. One-Class SVM and Kernel Density estimation are the most popular Anomaly Detection algorithm.