Binning, which is the creation of new variables through transforming Numerical Variables into Categorical Varsities, was discussed in the previous blog. Binning is reversed, and Encoding can be described as creating new variables by transforming categorical variables into numerical variables. This is because machine learning algorithms can’t read categorical data, so they must be numerical. One simple example is gender. An algorithm such as Linear Regression (or SVM) won’t understand the terms “Male” and “Female.” To make this variable useful for a learning algorithm, each term (categories) is converted into a binary attribute (0 and 1) so that 0 represents males and 1 represents females. The algorithm can then take these values into account.

Encoding refers to the conversion of numerical features into categorical features.

Method to Encode

There are two main methods for encoding: Binary Encoding and Target Based Encoding.

Binary Coding

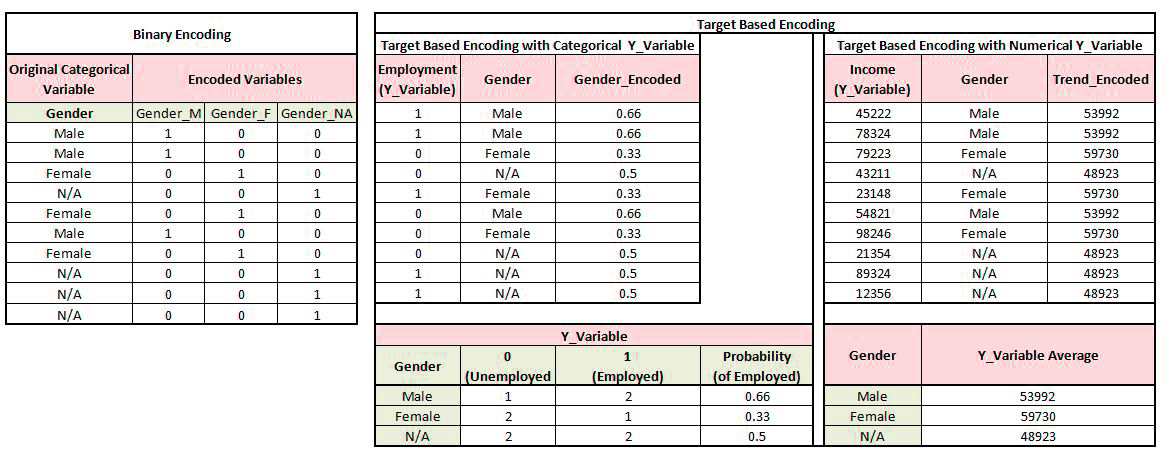

Binary Encoding uses binary values to indicate whether a particular category is present. A separate variable with values 0 or 1 is created for each category in a feature. The presence of a particular category is indicated by 1 and absence by 0. This can lead to an increase in data dimensions, especially when a variable contains a large number of categories. The curse of dimensionality occurs here. This means that a model cannot predict and perform well if the data has very large dimensions. If the dimensions are too small, it can lead to under-fitting. The model may need additional information (i.e., if the dimensions are too low, it can cause under-fitting, and the model may need additional information(i.e., This can lead to various problems in the modeling process, such as an increase in data dimensions due to encoding.

Target Based Encoding

Target Based Encoding is another method. This is different from Binary Encoding. Instead of creating separate variables for each feature, a single numerical feature is created for each categorical variable. Each category’s probability of being the target label is given. For example, let’s say we have two variables: Gender and Employment. Gender is the independent variable and has two categories – Male and Female – and the Y Variable. The dependent variable is Employment. It has two target labels – 0 for the unemployed and 1 for the employed. In the new Numerical variable for the feature Gender, instead of the category “Male,” the corresponding probability of reaching the target will be shown. The same process will be used for the other category. If Y is numerical, the average of the target variables is used. Binary encoding is more powerful than target-based encoding. It is dependent on the distribution of the target labels. Target-based Encoding is less common than Binary Encoding.

Binary v/s Target Based Encoding

Scalar Encoder v/s One Hot Encoding v/s Dummy Variables

Encoding via Scalar Encoder

Scalar Encoders are used when there is only one category in a feature. Scalar Encoder is useful when only two categories exist in a feature, such as Gender. It can be used to give Males a representative value of 0 and Females a value of 1, or vice versa. The dataset’s dimensions are maintained, and the categorical variable can be dropped once encoded.

One Hot Encoding

This term is often used in machine-learning models. A separate variable is created for each feature category. It has values 0 or 1 and works as an ‘On/Off switch. 1 is assigned to a specific category and 0 to a non-existent category. One Hot Encoding creates 10 variables for a feature with 10 (N) categories.

Encoding using dummy variables

There is only one difference between Dummy variables and One hot encoded variable. N-1 variables are created, not One hot encoded. One of the dummy variables has been left out because it serves as the basic assumption for avoiding perfect multicollinearity among datasets. The term Dummy variable is derived from statistics, while One Hot Encoding comes from computer science. Electronics actually borrowed it.

These encoding methods (One hot and Dummy variables) can result in very large dimensions, leading to overfitting or making the model computationally costly. Encoding can lead to high cardinality and a curse of dimensionality. This can be overcome by using dimensionality reduction methods such as PCA.

Other Techniques

Label Encoder

Label Encoding is a simple method of encoding labels. It encodes labels with values between 0 to n_classes-1. We have, for example, a feature called “colors” that has three categories: Blue, Greed, and Red. The Label Encoder provided numerical values for each category so that, for instance, Blue has 1 and Green 2, respectively, while Red has 3. This method of encoding has serious problems. For example, if the values have no natural ordering (if colors have no weight), then these encoded variables can give us misleading results. Any mean derived from such encoded variables will be incorrect. The model will make wrong predictions if such values are used in learning algorithms.

Simple Replace

Some numerals may be provided as text. We have a dataset that shows the number of cars owned in a household as “one,” “two,” or “three” rather than 1, 2, 2. These situations can be converted into numerical variables by simply finding the text and replacing it with its numerical equivalent.

You can use any of the encoding methods described above to create feature construction. Each method has its own advantages and disadvantages. One-Hot Encoding, which is the most common and widely used method of encoding, must be considered. However, it should be remembered that the curse of dimensionality must also be taken into consideration.